NVIDIA’s RTX cards are a gamble on the future of gaming

And that’s nothing to do with ray-tracing.

NVIDIA's RTX series of GPUs has been a long time coming. The company's last meaningful hardware revision, the 10 series, came out back in May 2016. And real-time ray-tracing, the intensive rendering technique that RTX cards purportedly make a reality, has been dreamed about for decades. But, although it hasn't dominated the headlines as much, the most important change RTX brings is the shift away from raw power and towards algorithms and AI.

But, I'm getting ahead of myself. First, let's have a quick look at what exactly NVIDIA is trying to sell you. Next week, two cards, the $700 RTX 2080 and $1,000 RTX 2080 Ti, will be vying for your cash, followed in October by the RTX 2070, which at $500 is likely to be the best seller of the three.

Starting at the bottom, in terms of raw power, the RTX 2070 is roughly equivalent to the GTX 1080; the RTX 2080 goes toe to toe with the GTX 1080 Ti; the RTX 2080 Ti is in a league of its own. The 2070 and 2080 have 8GB of GDDR6 RAM; the 2080 Ti has 11GB. All three are based on the company's new Turing architecture, which means they have cores dedicated to AI (Tensor) and ray-tracing (RT).

| | RTX 2080 Ti | RTX 2080 | RTX 2070 |
|---|---|---|---|
| CUDA cores | 4,352 | 2,944 | 2,304 |
| Tensor cores | 544 | 368 | 288 |
| RT cores | 68 | 46 | 36 |
| Memory | 11GB GDDR6 | 8GB GDDR6 | 8GB GDDR6 |
| Memory bandwidth (GB/sec) | 616 | 448 | 448 |
| TFLOPS | 13.4* | 10* | 7.5* |
| Price | $999* | $699* | $499* |

Expect a fourth card, likely the RTX 2060, to bring the entry price down significantly in the coming months, followed by a slew of cut-down options for budget-minded gamers (the 10 series made its way down to the sub-$100 GTX 1030). There's also room at the top end for expansion: The RTX 2080 Ti Founders Edition can handle 14.2 trillion floating-point operations per second (TFLOPS), while the Turing TU102 chip these new cards are based on pushes that figure up to 16.3 TFLOPS. That's achieved through a mix of higher clock speeds and more CUDA cores (the 2080 Ti has 4,352; the fully configured TU102 has 4,608).
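For a rough sense of where those TFLOPS figures come from, here is a minimal Python sketch. It assumes the usual rule of thumb that each CUDA core can retire one fused multiply-add (two floating-point operations) per clock. The boost clocks used below (1.635GHz for the Founders Edition, 1.77GHz for a fully enabled TU102) are assumptions that don't appear in this article, and `peak_tflops` is a made-up helper name.

```python
def peak_tflops(cuda_cores: int, boost_clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumes one fused multiply-add (counted as 2 FLOPs) per core per clock.
    """
    gflops = cuda_cores * 2 * boost_clock_ghz  # cores x FLOPs/clock x GHz
    return gflops / 1000.0


# Core counts come from the article; the clock speeds are assumed.
founders_edition = peak_tflops(4352, 1.635)
full_tu102 = peak_tflops(4608, 1.77)

print(f"RTX 2080 Ti Founders Edition: {founders_edition:.1f} TFLOPS")
print(f"Fully configured TU102:       {full_tu102:.1f} TFLOPS")
```

Under those assumptions, the gap between the two figures comes from both levers mentioned above: about 6 percent more cores and about 8 percent more clock speed.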

RTX also arrives with a lot of under-the-hood improvements. There's a faster caching system with a shared memory architecture, a new graphics pipeline and concurrent processing of floating-point and integer calculations. If that means nothing to you, don't worry too much: The takeaway from that word soup is that the RTX range not only has more raw power, it also uses that power more efficiently.

And that's the key here. Ray-tracing stole the headlines, and I'm intrigued to see how developers use it, but it's efficiency that really excites me about RTX.

The ultimate goal of a game system, be it a $2,000 gaming PC or a $300 Nintendo Switch, is to calculate a color value for each pixel on a screen. Even a simplified guide on how a modern graphics pipeline does this would run the length of a novella, but here's a three-sentence summary: CPUs aren't made to render modern graphics. Instead, a CPU sends a plan for what it wants to draw to a GPU, which has hundreds or thousands of cores that can work independently on small chunks of an image. The GPU executes on the CPU's plan, running shaders -- very small programs -- to define the color of each pixel.

The challenge for both graphics-card manufacturers and game developers, then, is scale. That $300 Switch, in portable mode, typically calculates 27-million pixel values a second, which it can do just fine with a three-year-old mobile NVIDIA chip. If you're targeting 4K at 60FPS (which is what many gamers buying RTX cards want) your system needs to push out close to half-a-billion pixels a second. That puts a huge strain on a system, especially when you consider that your PC isn't just picking these colors out of thin air, and is instead simulating a complex 3D environment in real time as part of the calculations.
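The arithmetic behind those two figures is simple enough to check. This short Python sketch assumes the Switch's portable mode means 1280x720 at 30FPS (the article only gives the resulting total) and takes 4K to mean 3840x2160.

```python
def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Number of pixel color values a system must produce each second."""
    return width * height * fps


# Assumed targets: 720p at 30FPS for the Switch in portable mode, 2160p at 60FPS for 4K.
switch_portable = pixels_per_second(1280, 720, 30)
uhd_60 = pixels_per_second(3840, 2160, 60)

print(f"Switch (portable): {switch_portable:,} pixels/sec")
print(f"4K at 60FPS:       {uhd_60:,} pixels/sec")
print(f"Ratio:             {uhd_60 / switch_portable:.0f}x")
```

On those assumptions the 4K target asks for 18 times as many pixel values per second, before accounting for any extra scene complexity.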

There are already plenty of techniques used to reduce that strain. One is rendering all or parts of a scene at a lower resolution and stretching the results out. This is super obvious when you have a game running at 720p on a 1080p screen, but less so when, say, a fog cloud is being drawn at quarter-resolution. And that's what NVIDIA's optimizations are all about: cutting down the quality in places you won't notice.

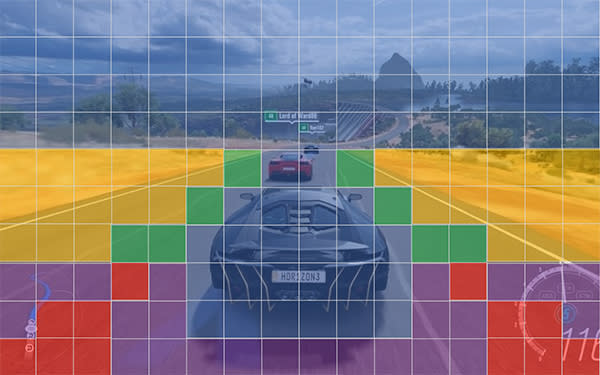

NVIDIA's new graphics pipeline can employ several new shading techniques to cut corners. In many ways, this builds on less-flexible power-saving measures utilized for VR, like MRS (multi-resolution shading) and LMS (lens-matched shading). In the image above, you're seeing a GPU breaking a scene down into a grid in real time. The uncolored squares are high-detail, and shaded at a 1:1 ratio, just like a regular game scene. The colored ones don't need the same level of attention. The red squares, for example, are only shaded in 4x4 pixel blocks, while more-detailed but non-essential blue squares are shaded in 2x2 blocks. Given the low detail level of those areas of the image, the change is essentially unnoticeable.

You can take this basic concept, that pixel-shading rates don't have to be fixed throughout an image, and apply it in targeted ways. In racing games your gaze is basically fixed on your car and the horizon. The pixels in the central and top half of the screen could be filled in at 1:1, but the corners could be 4x4. (With 2x2 and 2x1 blocks in between easing the transition, of course.) This, NVIDIA says, will be essentially imperceptible in motion and decreases the load on the shading cores, allowing for higher frame rates.
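To make the racing-game idea concrete, here is a rough Python sketch that counts how many pixel-shader results a frame needs under a variable shading rate. Everything in it is illustrative: the 16x16-pixel tile size, the thresholds in `rate_for_tile` and the function names are assumptions rather than NVIDIA's actual implementation, and for simplicity this policy only coarsens the bottom corners.

```python
TILE = 16  # assumed tile size in pixels; each tile gets one shading rate


def rate_for_tile(tx, ty, tiles_x, tiles_y):
    """Hypothetical racing-game policy returning a (block_w, block_h) shading rate."""
    # Normalized horizontal distance from the screen center: 0 at center, 1 at the edge.
    dx = abs((tx + 0.5) / tiles_x - 0.5) * 2
    # Normalized vertical position: 0 at the top of the screen, 1 at the bottom.
    y = (ty + 0.5) / tiles_y
    if y < 0.5 or dx < 0.5:
        return (1, 1)  # top half and center column: full rate
    if dx > 0.85 and y > 0.85:
        return (4, 4)  # far bottom corners: one result per 4x4 block
    if dx > 0.7 and y > 0.7:
        return (2, 2)  # transition band
    return (2, 1)      # mild reduction everywhere else


def shader_invocations(width, height, policy):
    """Count shader results needed when every tile uses the rate chosen by `policy`."""
    tiles_x, tiles_y = width // TILE, height // TILE
    total = 0
    for ty in range(tiles_y):
        for tx in range(tiles_x):
            block_w, block_h = policy(tx, ty, tiles_x, tiles_y)
            total += (TILE * TILE) // (block_w * block_h)
    return total


full = shader_invocations(3840, 2160, lambda *_: (1, 1))
variable = shader_invocations(3840, 2160, rate_for_tile)
print(f"{1 - variable / full:.1%} fewer pixel-shader invocations")
```

The saving depends entirely on the policy chosen; a real game would tune the regions to its own camera and content.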

NVIDIA is working on more advanced shading techniques that will, for example, allow developers to reuse texture shading over multiple frames or change the quality of shading on moving objects that your eyes can't resolve. They're all efficiency plays, intended to squeeze more out of the same hardware. One example shown to press last month at the RTX launch had Wolfenstein II: The New Colossus running with adaptive shading: NVIDIA said it could provide a 15-to-20-percent improvement in frame rates with negligible image compromises.

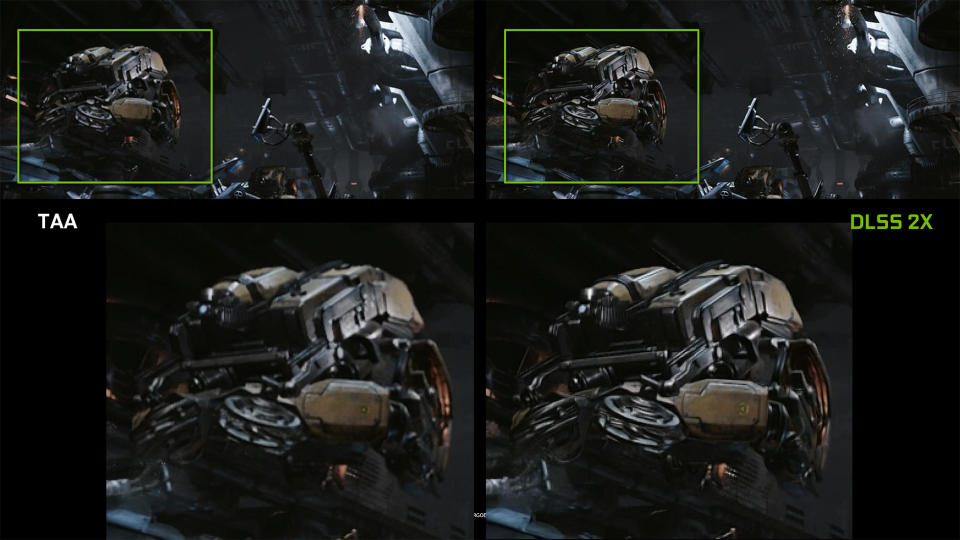

But what if you could remove a load from those shading cores entirely? That's the concept behind DLSS (deep learning super sampling). DLSS is a new form of anti-aliasing (AA), an effect applied to games that smooths out rough edges. It doesn't run on CUDA cores at all and, instead, utilizes AI and the new Tensor cores.

For DLSS, NVIDIA created a game-specific algorithm using a supercomputer. Again, simplifying the explanation, the supercomputer looks at ultra-high-resolution images and compares the data to a low-resolution version. It will then try and fail millions of times to find a way to make the low-resolution image look like the ultra-high-resolution one. Once it succeeds, NVIDIA packages the algorithm for the game and sends it out via its GeForce Experience app for gamers to use.

In practice it looks, mostly, great. The results are typically sharper than the TAA (temporal anti-aliasing) you see in many modern games. Like all AA techniques, it has its strengths and weaknesses: It's unbelievably good at resolving fine detail while straight edges aren't always flawless. Although I personally prefer it to any TAA implementation I've seen, the fact that it exists is probably enough: This is free AA. Especially if you're the sort to run something like MSAA, you can save a huge amount of GPU power for results that are very comparable.

The major limit to DLSS is compatibility: NVIDIA needs to create a custom algorithm for each game. It will do this for free, if the game's developer is interested, but there's no saying how many will take the company up on its offer. NVIDIA typically doesn't see fantastic adoption rates for hardware-locked features like HairWorks. But DLSS purportedly requires little-to-no work on the developer's part, so maybe it'll catch on. There is a handful of games offering support close to launch, including Final Fantasy XV, Hitman 2, PUBG and Shadow of the Tomb Raider.

Finally, NVIDIA showed an extremely impressive demo of an asteroid field filled with geometry. Instead of the CPU asking the GPU to draw each asteroid, the CPU sent along a list of objects. This list was then processed, drawn and shaded across thousands of cores. This new method of CPU-GPU communication largely eliminates a common bottleneck that tanks frame rates when too much is going on in a game and will also allow an increase in scene complexity.

The techniques shown in the demo could also change the way developers approach LODs (level-of-detail settings). LODs define the distance at which objects and textures are loaded into a scene. While typically statically defined on consoles, on PC you often get a choice of low, medium, high and so on. Play a game on epic settings, and you'll see grass, trees, buildings and the like rendered to the horizon. On low, only a small portion of foliage will be rendered in, and distant buildings might be missing or replaced by low-polygon placeholders.

Level of detail is integrated into the list the CPU sends the GPU: The developer creates numerous quality assets, and the GPU then constantly scans the scene to dictate which are displayed at any given time, based on the size of the object on the screen. This allows for a high-quality asset to decrease to crude geometry when it's only taking up a few pixels. This technique could be adapted to follow the principle of dynamic resolution, adjusting the quality of non-crucial objects, rather than just raw resolution, to improve performance further.
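The size-based selection described above can be sketched in a few lines of Python. This is only an illustration of the logic: in NVIDIA's demo the choice happens on the GPU, while the pinhole-camera projection, the pixel thresholds and the asset names below are all hypothetical.

```python
import math


def projected_height_px(object_height, distance, fov_y_deg, screen_height_px):
    """Approximate on-screen height of an object, using a simple pinhole-camera model."""
    # World-space height of the visible area at the object's distance.
    view_height = 2 * distance * math.tan(math.radians(fov_y_deg) / 2)
    return object_height / view_height * screen_height_px


# Hypothetical LOD table: (minimum on-screen height in pixels, asset), finest first.
LODS = [
    (400, "asteroid_lod0"),  # full-detail mesh
    (100, "asteroid_lod1"),
    (20, "asteroid_lod2"),
    (0, "asteroid_lod3"),    # crude geometry for objects covering a few pixels
]


def select_lod(size_px, lods=LODS):
    """Return the finest asset whose pixel threshold the object still meets."""
    for min_px, asset in lods:
        if size_px >= min_px:
            return asset
    return lods[-1][1]


# A 10-unit-tall asteroid viewed at increasing distances on a 2160-pixel-tall screen.
for distance in (10, 100, 1000):
    size = projected_height_px(10, distance, 60, 2160)
    print(f"distance {distance:>4}: {size:7.1f}px -> {select_lod(size)}")
```

Adapting this toward dynamic resolution, as suggested above, would amount to shifting the thresholds up or down depending on how much frame time is left.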

All of these new performance-improving tactics -- there are more in NVIDIA's white paper than I've just mentioned -- are a much bigger deal for the next few years of gaming than ray-tracing. Although we'll have to wait for reviews to start rolling in, it's likely that, with the hardware we have right now, real-time ray-tracing is just going to offer a minor visual embellishment. But the benefits of DLSS and variable shading are immediate and go beyond the three RTX cards, and even beyond NVIDIA.

The idea that you don't need to keep on boosting power is compelling. We're approaching the limit of what we can do with silicon, and we've already seen the yearly boosts in raw power slow from a leap to a shuffle.

"The cloud" is often cited as a nebulous fix to this, but it can't be the sole answer. Shifting the load away, once the world is bathed in high-speed internet access, can help, but, ultimately, data-center designers don't have access to hitherto undescribed exotic materials and will come up against the same performance constraints as consumers. As display manufacturers have an unquenchable thirst for resolution increases, and humans have an unquenchable thirst for buying incrementally better things, we need to find more intelligent ways to render our games.

It's difficult to see graphics cards being able to render an 8K game in real time without the kind of AI and pipeline improvements NVIDIA is showing off to promote its new cards. Of course, these advances won't only come from NVIDIA; we need to see AMD GPUs that offload AI busy work, and Microsoft and Khronos have to integrate these new ideas into DirectX and Vulkan. (To that end, NVIDIA says it intends to get its advanced shading tech added to DirectX.)

As the companies involved in rendering our games come up with more ways to save power, the benefits will be felt industry wide. The same tech that lets the 2080 Ti hit 4K at 60FPS could help power, for example, a Switch 2 that's far less compromised on the go. Given NVIDIA already makes the chip in the Switch, there's little-to-no reason why a future console from the pair couldn't add in some Tensor cores that drastically decrease the number of pixels it needs to shade, or run a super-sampled AA pass to mask a low rendering resolution. Just yesterday, Apple announced its new phone chip has eight cores dedicated to running AI -- this shift towards more intelligent computing is coming, and all gamers will benefit.