Recently I decided to move my cloud native workloads from my primary datacentre in Utrecht to the secondary in Southport, UK. After getting NSX-T up and running in my nested compute cluster, it was time to begin the installation of Pivotal Container Service (PKS). As PKS 1.1 had just been released, it made sense to go with the latest version.

However, despite the installation initially going well, issues began to arise.

Failed clusters

First I tried to deploy a small (three-node) cluster. Unfortunately, I hadn’t configured NAT correctly on my PKS tile in Ops Manager, so the cluster failed to deploy:

At this point the cluster was marked as failed, so I set about removing it. Unfortunately that failed too:

Small text, but you get the picture

To move ahead I had to change my networking configuration, so after making the necessary amendments I clicked Apply Changes. By default, however, PKS runs errands whenever changes are applied, including one that upgrades all clusters. Because my cluster's VMs had all been deleted while its record still existed in the PKS database, this errand failed as well, leaving me in a chicken-and-egg scenario.

I could have disabled the errands in question, but I would still have had to remove the faulty PKS cluster at some point, so I decided to start with that before trying to apply the changes again.

Getting Started

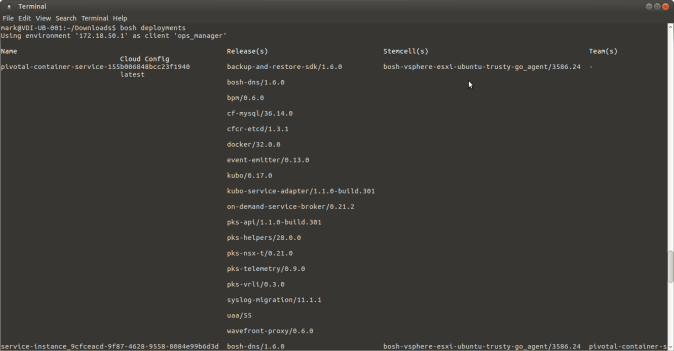

To begin with, list the deployments:

bosh deployments

Here we can see a deployment for PKS:

Now we have the deployment name we can list the VMs (substitute accordingly):

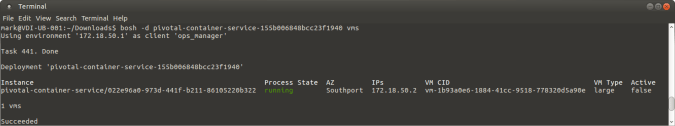

bosh -d pivotal-container-service-155b006848bcc23f1940 vms
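If you would rather not copy the deployment name by hand, it can be picked out of the listing. This is only a minimal sketch: `pks_deployment` is a hypothetical helper name, and it assumes the PKS deployment name starts with `pivotal-container-service-`, as it does in my environment.

```shell
# Hypothetical helper: read deployment names on stdin and print the first one
# that looks like the PKS tile's deployment (assumed name prefix).
pks_deployment() {
  awk '$1 ~ /^pivotal-container-service-/ { print $1; exit }'
}

# Assumed usage with a logged-in BOSH CLI v2:
#   dep=$(bosh deployments --column=name | pks_deployment)
#   bosh -d "$dep" vms
```

The helper only filters text, so it is harmless to try against the output of `bosh deployments` before using it in the follow-up commands.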

This gives us the VM name:

SSH into the PKS VM:

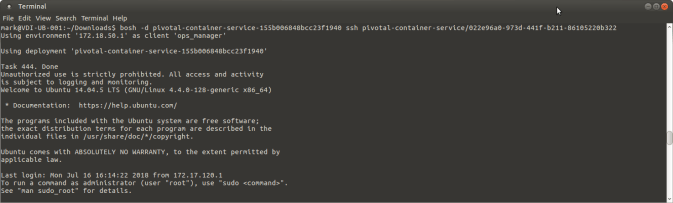

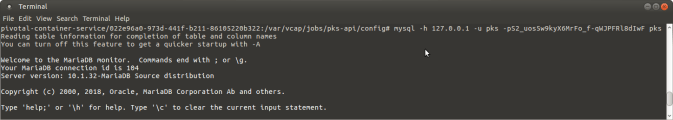

bosh -d pivotal-container-service-155b006848bcc23f1940 ssh pivotal-container-service/022e96a0-973d-441f-b211-86105220b322

Switch to root using:

sudo su -

Despite the VMs for our cluster being deleted, PKS still thinks the cluster exists, because its record is still in the database. Next we will change that.

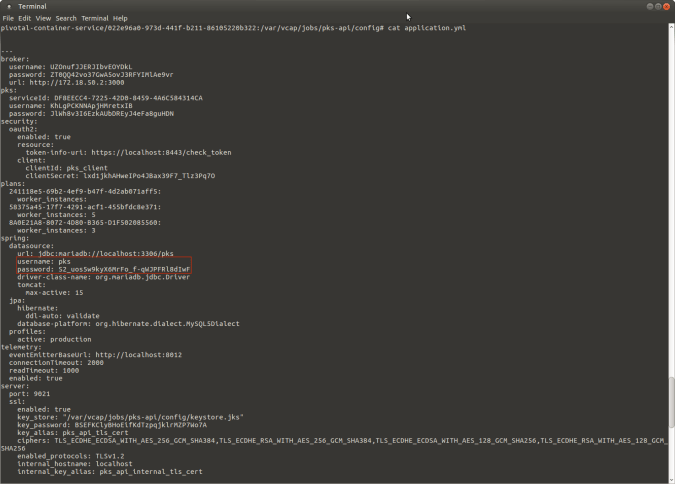

To edit the database, we need the user account and credentials. To find these use:

cat /var/vcap/jobs/pks-api/config/application.yml

Look for the username and password section and make a note of the credentials:
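Rather than scrolling through the whole file, the relevant lines can be filtered out. A small sketch, assuming the keys are literally named `username` and `password` (check against your own file); `show_db_credentials` is a hypothetical helper name, not part of PKS.

```shell
# Hypothetical helper: print only the username/password lines of a YAML file.
# Assumes the keys are named "username" and "password", at any indentation.
show_db_credentials() {
  grep -E '^[[:space:]]*(username|password):' "$1"
}

# Assumed usage on the PKS VM:
#   show_db_credentials /var/vcap/jobs/pks-api/config/application.yml
```

If the file contains more than one username/password pair, all of them are printed, so make sure you note the pair that belongs to the database section.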

We’ll need these credentials for MySQL

Connect to MySQL using (substitute accordingly):

mysql -h 127.0.0.1 -u pks -pS2_uos5w9kyX6MrFo_f-qWJPFRl8dIwF pks

Please note: there is no space between -p and the password

List the tables:

show tables;

Here we can see a table called cluster. Let’s look inside:

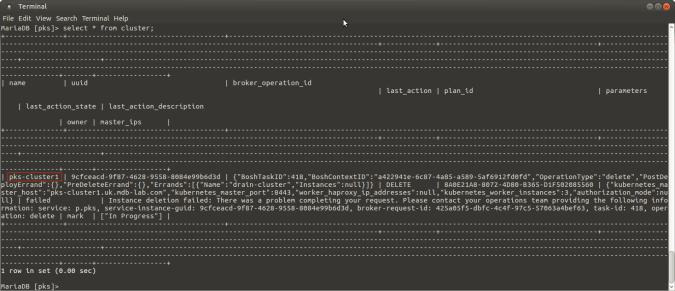

select * from cluster;

There’s our wayward pine

There’s our defunct cluster called pks-cluster1. Remove it by using:

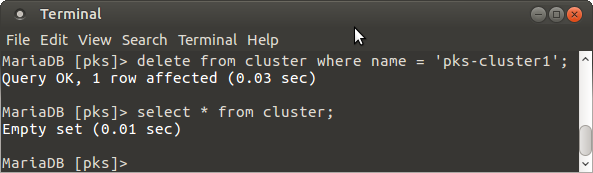

delete from cluster where name = 'pks-cluster1';

Check it has been successfully deleted using:

select * from cluster;
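Because this edits the PKS database directly, it is worth making sure the statement can only ever touch the intended row. The sketch below generates the same statements for a given cluster name and refuses anything that does not look like a plain name. `delete_cluster_sql` is a hypothetical helper of my own, and the set of allowed characters is an assumption.

```shell
# Hypothetical helper: print the SQL to inspect and delete one cluster row.
# Refuses empty names and names with characters outside A-Z, a-z, 0-9, _ and -
# (an assumed whitelist) so that nothing unexpected ends up inside the quotes.
delete_cluster_sql() {
  case "$1" in
    ''|*[!A-Za-z0-9_-]*)
      echo "refusing unexpected cluster name: $1" >&2
      return 1
      ;;
  esac
  cat <<EOF
SELECT * FROM cluster WHERE name = '$1';
DELETE FROM cluster WHERE name = '$1';
SELECT ROW_COUNT() AS rows_deleted;
EOF
}

# Assumed usage: print the statements, then paste them at the mysql prompt.
#   delete_cluster_sql pks-cluster1
```

`ROW_COUNT()` reports how many rows the preceding `DELETE` affected, so a value of 1 confirms that exactly one cluster record was removed.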

And like that… he’s gone

That’s it!

After deleting my faulty cluster, I could leave the errand enabled and reapply my configuration, this time with networking configured correctly.

I’d like to thank my friend and colleague Dan Higham from Pivotal for his help with this.

Thanks for sharing. The only thing I can add to your post is that you can SSH to the Ops Manager using PuTTY or another SSH client.

Thanks again man!
